Quark

Real-time, High-resolution, and General Neural View Synthesis (SIGGRAPH 2024)

Quark - Real-time, High-resolution, and General Neural View Synthesis

John Flynn, Michael Broxton, Lukas Murmann, Lucy Chai, Matthew DuVall, Clément Godard, Kathryn Heal, Srinivas Kaza, Stephen Lombardi, Xuan Luo, Supreeth Achar, Kira Prabhu, Tiancheng Sun, Lynn Tsai, Ryan Overbeck

paper :

https://arxiv.org/abs/2411.16680

project website :

https://quark-3d.github.io/

reference :

Presentation of https://charlieppark.kr from 3D-Nerd Community

Contribution

- Architecture :

- 3D space에서 ray를 쏘거나(NeRF) Gaussian list를 구해서(3DGS) alpha-compositing하는 게 아니라

layered RGB image(or depth map)를 구해서 alpha-compositing -

target view가 어떤 input view에 얼만큼 attention해야 하는지를iteratively refine하여

Blend Weights를 구해서 input view들을 interpolate하는 방식 - refinement로 Blend Weights 구해서

input images를 blend하여 layered RGB images를 구하므로

input view가 멀리멀리 sparse하게 떨어져 있어야

recon.할 때 모든 영역 커버 가능

- 3D space에서 ray를 쏘거나(NeRF) Gaussian list를 구해서(3DGS) alpha-compositing하는 게 아니라

-

Generalizable:- pre-trained model 가져온 뒤

pre-trained model이 학습하지 못했던unseen scene에 대해

fine-tuning 없이refinement로

layered depth map 쫘르륵 얻어내서 novel view recon. 가능!

- pre-trained model 가져온 뒤

-

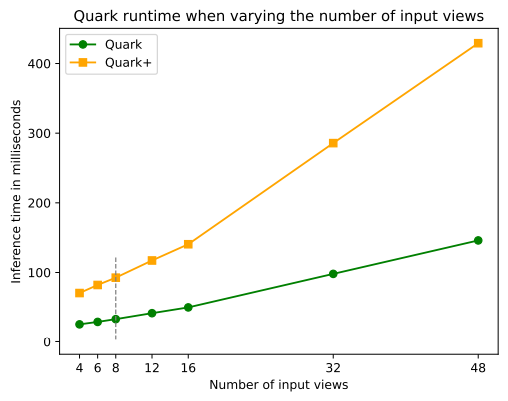

Real-timeReconstructionand Rendering :- 3DGS에서는 real-time rendering이었는데

본 논문은 recon. 자체도 real-time

(inference하는 데 총 33ms at 1080p with single A100 GPU)

- 3DGS에서는 real-time rendering이었는데

Related Works

- Generalizable :

- IBRNet :

rendering 시간은 오래 걸리지만 generalizable - ENeRF :

cost volume, depth-guided sampling, volume rendering 사용 - GPNR :

2-view VFT, Epipolar Transformer 사용 - CO3D - NeRFormer :

반복 between attention on feature-dim. and attention on ray-direction-dim.

- IBRNet :

- Quark의 직계 조상 paper :

- DeepView

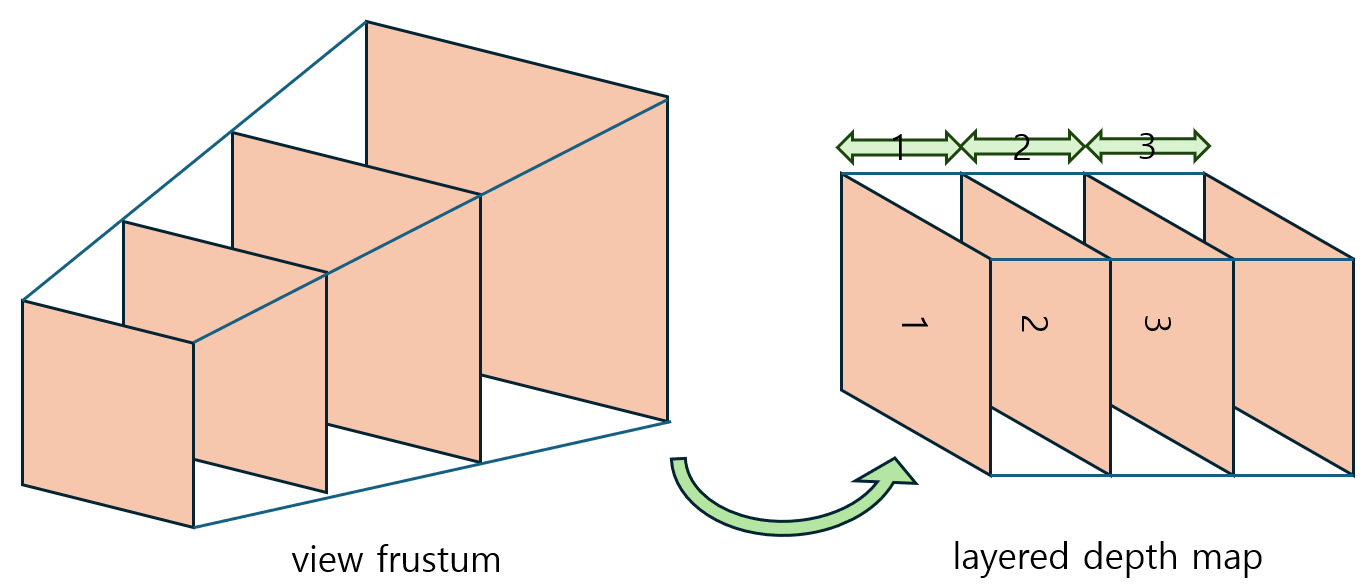

[1] :- MPI (여러 depth에 대해 image를 중첩한 multi-plane image)

- 한계 : input view와 target view 간의 camera 이동이 크면 안 됨

- Immersive light field video with a layered mesh representation

[2] :- MSI (여러 depth에 대해 곡면 image를 중첩한 multi-spherical image) (= layered mesh)

- DeepView

Overview

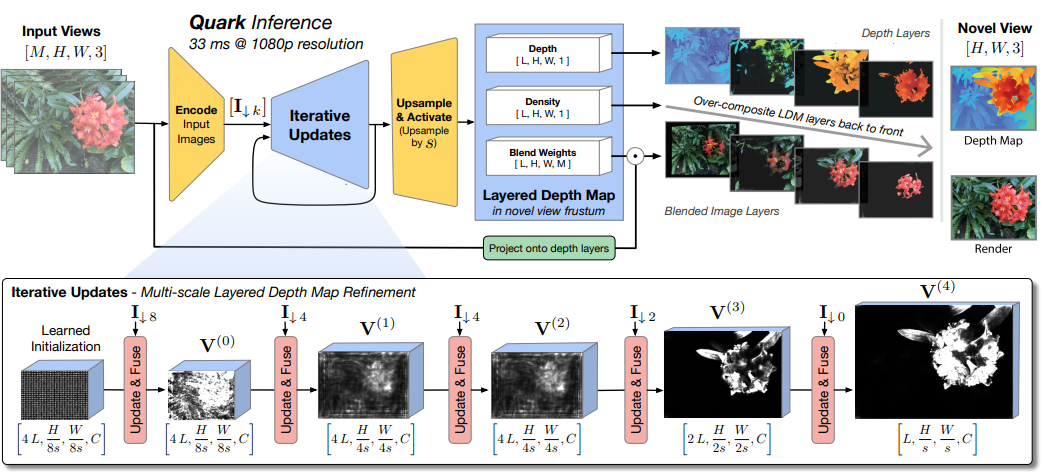

- I/O :

- input : sparse multi-view images (\(\in R^{M \times H \times W \times 3}\))

(sensitive to view selection)

(pose 정보 필요) - output : novel view image

- Quark는 pretrained model (pretrained with 8 input views of scenes(Spaces, RFF, Nex-Shiny, and SWORD)) 가져와서

unseen scene에 대한 refinement로 novel target view synthesis 가능 (generalizable)- Spaces : Quark의 직계 조상 격인 DeepView에서 사용한 dataset

- RFF : NeRF에서 사용한 Real Forward Facing dataset

- Nex-Shiny : NeX에서 사용한 shiny object이 포함된 dataset

- SWORD : real-world scene dataset

- input : sparse multi-view images (\(\in R^{M \times H \times W \times 3}\))

- Architecture :

U-Net style- Encoder :

Obtain feature pyramid \(I_{\downarrow 8}, I_{\downarrow 4}, I_{\downarrow 2}, I_{\downarrow 0}\) from input image - Iterative Updates :

- pre-trained model을 가져와서 학습하는데,

layered depth map을 업데이트하는 방법은

gradient descent 이용한fine-tuning이 아니라

input view feature 이용한refinement임!! - U-Net skip-connection과 비슷하지만

Update & Fuse 단계가 novel

(아래에서 별도로 설명)

- pre-trained model을 가져와서 학습하는데,

- Upsample & Activate :

- image resolution으로 upsample한 뒤

Layered Depth Map at target view 구함- Depth \(d \in R^{L \times H \times W \times 1}\)

(이 때, depth map은 linear in disparity (가까운 high-freq. 영역에서 더 촘촘히)) - Opacity \(\sigma \in R^{L \times H \times W \times 1}\)

- Blend Weights \(\beta \in R^{L \times H \times W \times M}\)

by attention softmax weight

- Depth \(d \in R^{L \times H \times W \times 1}\)

- image resolution으로 upsample한 뒤

- Rendering :

- input images \(\in R^{M \times H \times W \times 3}\) 를 Layered Depth Map (target view)로 back-project한 뒤

Blend Weights \(\beta\) 로 input images를 blend해서 per-layer RGB 얻음 - Opacity \(\sigma\) 로 per-layer RGB를 alpha-compositing해서 final RGB image at target view 얻고,

Opacity \(\sigma\) 로 Depth \(d\) 를 alpha-compositing해서 Depth Map 얻음 - training할 때는 stadard differentiable rendering 사용하지만

inference할 때는 1080p resol. at 1.3 ms per frame 위해 CUDA-optimized renderer 사용

- input images \(\in R^{M \times H \times W \times 3}\) 를 Layered Depth Map (target view)로 back-project한 뒤

- Encoder :

Method

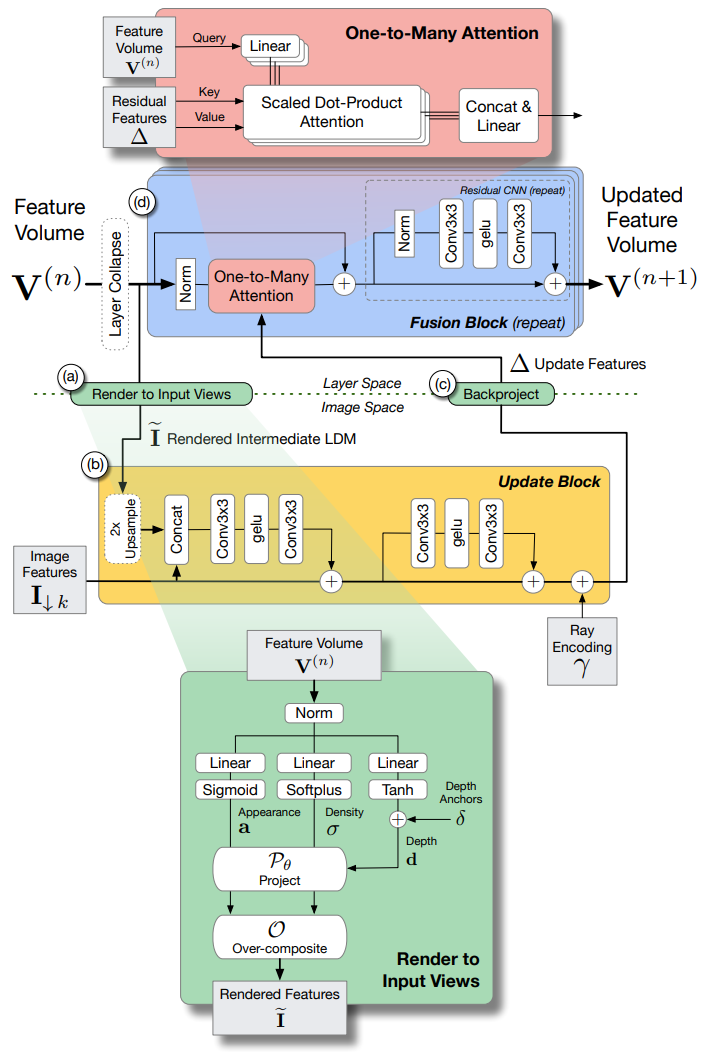

- Update & Fuse :

- Step 1) Render to Input Views

- from

layer space (target view)toimage space (input view)

(feature pyramid \(I_{\downarrow k}\) 와 합치기 위해!) - feature volume \(V^{(n)}\)

\(\rightarrow\) obtain appearance \(a\), density \(\sigma\), depth map \(d\)

(depth map \(d = \delta + \text{tanh}\) 는 depth anchor \(\delta\) 근처의 depth)

\(\rightarrow\) project from target-view into input-view by \(P_{\theta}\)

\(\rightarrow\) obtain rendered feature \(\tilde I\) by alpha-compositing \(O\) at input-view

(\(\tilde I\) : intermediate LDM(layered depth map))

- from

- Step 2) Update Block

-

rendered feature\(\tilde I\) 를

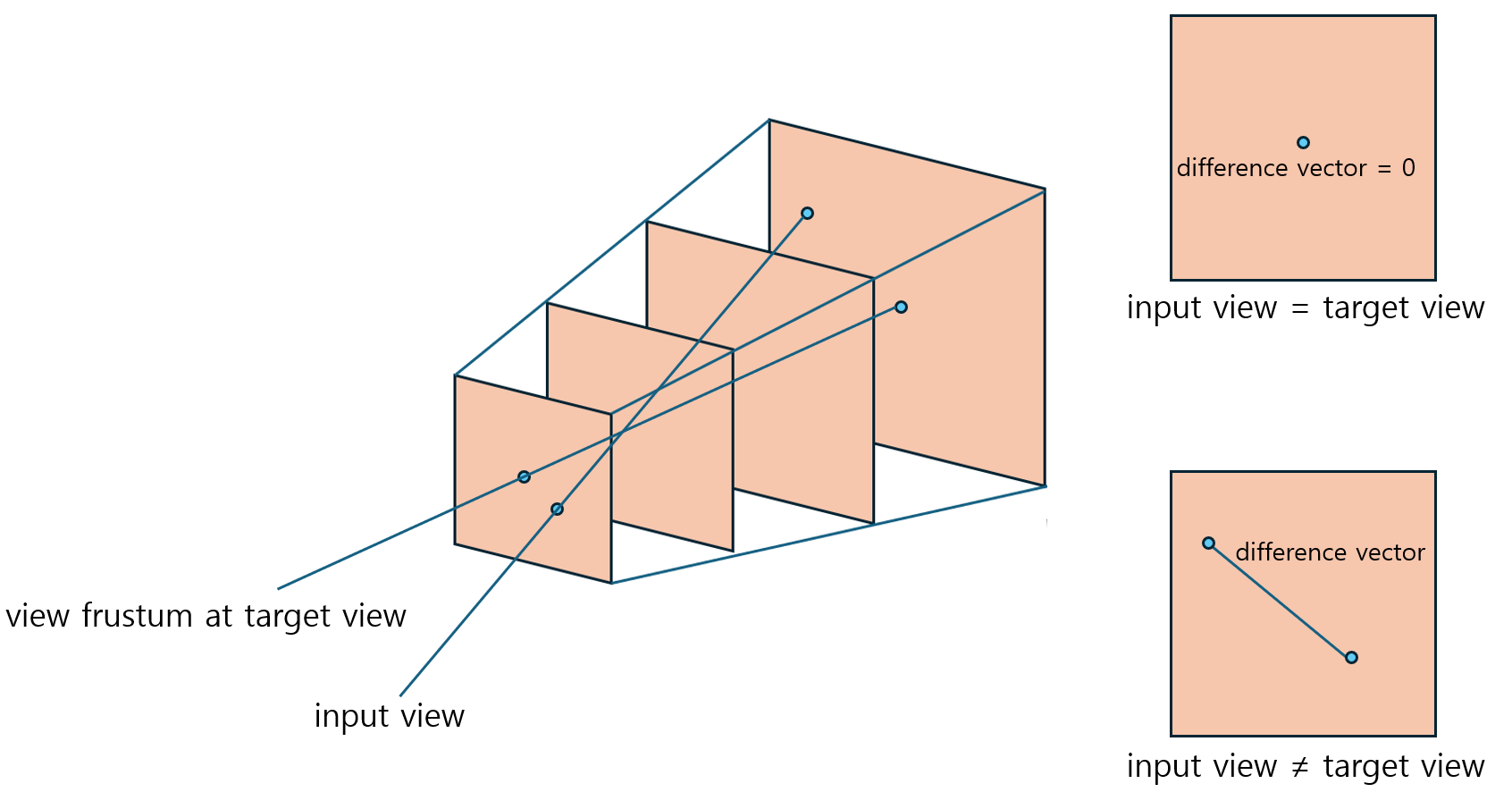

feature pyramid\(I_{\downarrow k}\),input view-direction\(\gamma\) 등 input image에 대한 정보와 섞음- input view-direction 넣어줄 때 Ray Encoding \(\gamma\) 수행 :

- obtain difference vector (아래 그림 참고)

(input view가 target view에서 멀리 떨어져 있을수록 값이 큼)

\(\rightarrow\) tanh and Sinusoidal PE - tanh 사용하므로

difference vector가 0 근처일 때

즉, input view가 target view 근처일 때 gradient 많이 반영 - input view’s ray가 frustum 밖으로 벗어나더라도

near, far plane과의 교점을 구할 수 있으므로

Ray Encoding 가능 - view-direction 넣어줘야

view-dependent color 만들 수 있고

reflection, non-lambertian surface 잘 구현 가능

- obtain difference vector (아래 그림 참고)

- input view-direction 넣어줄 때 Ray Encoding \(\gamma\) 수행 :

-

- Step 3) Back-project

- from

image space (input view)tolayer space (target view)

(feature volume \(V^{(n)}\) 과 합치기 위해!) - back-project from input-view into target-view by \(P_{\theta}^{T} (I, d)\)

\(\rightarrow\) obtain residual feature \(\Delta\)

- from

- Step 4) One-to-Many Attention

-

feature volume\(V^{(n)}\) 을query로,

Step 1~3)에서 얻은residual feature\(\Delta\) 를key, value로 하여

One-to-Many attention 수행하여

updated feature volume \(V^{(n+1)}\) 얻음

Then, target view가 input view의 feature들을 aggregate하여 이용할 수 있게 됨!!

즉, target view가 어떤 input view에 얼만큼 attention해야 하는지!-

query:target view정보 at target view space -

key, value:input view정보 at target view space -

One-to-Many attention:- cross-attention과 비슷하지만

redundant matrix multiplication 없애서

complexity 줄여서

real-time reconstruction에 기여! - \(\text{MultiHead}(Q, K, V) = \text{concat}(\text{head}_{1}, \cdots, \text{head}_{h}) W^{O}\)

where \(\text{head}_{i} = \text{Attention}(QW_{i}^{Q}, KW_{i}^{K}, VW_{i}^{V})\) 식을 써보면

\(W_{i}^{Q} (W_{i}^{K})^{T}\) 항에서 \(W^{Q}\) 와 \(W^{K}\) 가 redundant 하고

\(\text{concat}(\cdots W_{i}^{V}) W^{O}\) 항에서 \(W^{V}\) 와 \(W^{O}\) 가 redundant 하므로

\(\text{head}_{i} = \text{Attention}(QW_{i}^{Q}, K, V)\) 로 바꿔서

\(W^{Q}\) 와 \(W^{O}\) 만 사용

- cross-attention과 비슷하지만

-

-

- Step 1) Render to Input Views

difference vector for input view-direction (Ray Encoding)

Result

- Training :

- Dataset : Spaces, RFF, Nex-Shiny, SWORD

- Loss : \(\text{10 * L1} + \text{LPIPS}\)

- Input : 8 views (randomly sampled from 16 views nearest to object)

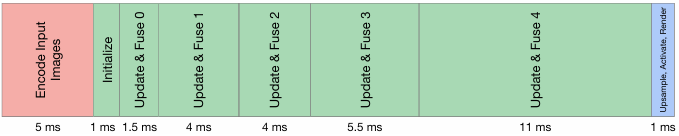

- Inference time : recon.까지 포함해서 총 33ms at 1080p single A100 GPU

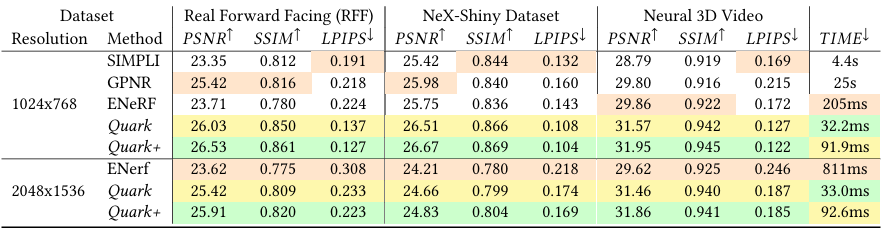

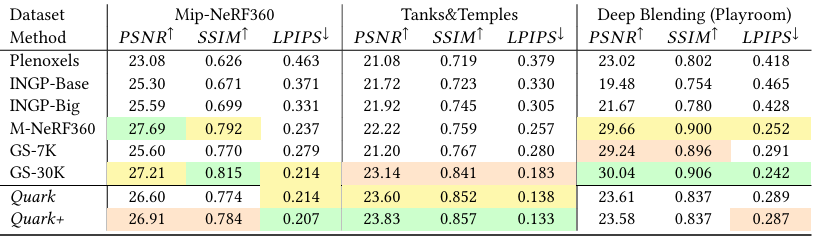

Generalizable method와의 비교

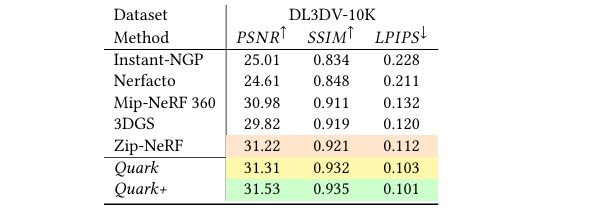

Non-Generalizable method와의 비교

Discussion

- Limitation :

- view selection :

training할 때 sparse(8개) input views를 사용하는데,view selection에 매우 민감 (중요함) (heuristic) - Blend Weights :

target view RGB image를 rendering하기 전에 input RGB images를 blend하는데,-

view dependency를 잘 캡처 못한다

\(\rightarrow\) Ray Encoding으로 해소하긴 함 - input images의

focal length가 각기 다르면 잘 recon.하지 못한다

-

- sparse input :

real-time rendering 뿐만 아니라 real-time recon. 위해

적은 수(8 ~ 16)의sparse inputs사용 - light, shadow 고려 X

- conv. network를 일반화에 사용했을 때 생기는 깨지는 artifacts 발생 (홈페이지 영상 참고)

- view selection :