State Space Model


Reference paper:

https://arxiv.org/abs/2406.07887

Reference talk:

by Wonmin Byeon (NVIDIA)

Abstract

- Large Language Models (LLMs) are usually based on Transformer architectures.

  - Advantages of Transformer-based models:

    - highly parallelizable

    - can model massive amounts of data

  - Disadvantages of Transformer-based models:

    - significant computational overhead due to the quadratic self-attention calculations, especially on longer sequences

    - large inference-time memory requirements from the key-value cache

- More recently, State Space Models (SSMs) like Mamba have been shown to offer fast, parallelizable training and fast inference as an alternative to Transformers.

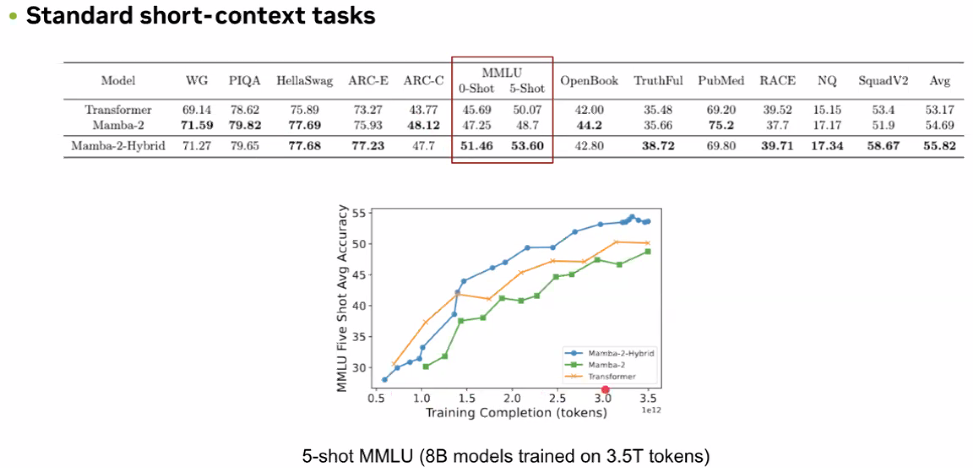

In this talk, I present the strengths and weaknesses of Mamba, Mamba-2, and Transformer models at larger scales. I also introduce a hybrid architecture consisting of Mamba-2, attention, and MLP layers.

While pure SSMs match or exceed Transformers on many tasks, they lag behind Transformers on tasks that require strong copying or in-context learning abilities.

In contrast, the hybrid model closely matches or exceeds the Transformer on all standard and long-context tasks and is predicted to be up to 8x faster when generating tokens at inference time.

Is Attention All We Need?

- Transformer:

  - fast training due to parallelization

  - slow inference for long sequences (contexts)

  - a key-value cache can improve speed, but increases GPU memory usage

- RNN:

  - slow training because it cannot be parallelized

  - fast inference because cost scales linearly with sequence length

- Mamba:

  - fast training

  - fast inference because cost scales linearly with sequence length, and it can deal with unbounded context

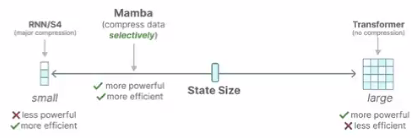

- SSM or RNN:

  - state = fixed-size vector (compression)

  - high efficiency, but low performance

- Transformer:

  - cache of entire history (no compression)

  - high performance, but low efficiency
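To make the compression trade-off above concrete, here is a rough back-of-the-envelope sketch. The function names, the model shape, and the simplified SSM state formula (it ignores any convolution state) are assumptions made for illustration, not values from the talk.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_elem=2):
    """Memory for cached keys and values: grows linearly with seq_len."""
    # Factor 2: one tensor for keys and one for values in every layer.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


def ssm_state_bytes(n_layers, d_inner, d_state, bytes_per_elem=2):
    """Memory for a fixed-size recurrent state: independent of seq_len."""
    return n_layers * d_inner * d_state * bytes_per_elem


# Hypothetical model shape, chosen only for illustration.
cfg = dict(n_layers=32, n_kv_heads=8, head_dim=128)
ssm = ssm_state_bytes(n_layers=32, d_inner=8_192, d_state=128)
for seq_len in (4_096, 32_768, 131_072):
    kv = kv_cache_bytes(seq_len, **cfg)
    print(f"{seq_len:>7} tokens: KV cache {kv / 2**20:8.0f} MiB, "
          f"SSM state {ssm / 2**20:6.0f} MiB")
```

The key-value cache term contains `seq_len`, while the state term does not, which is the "no compression" versus "compression" distinction in the list above.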

Mamba: Linear-Time Sequence Modeling with Selective State Spaces

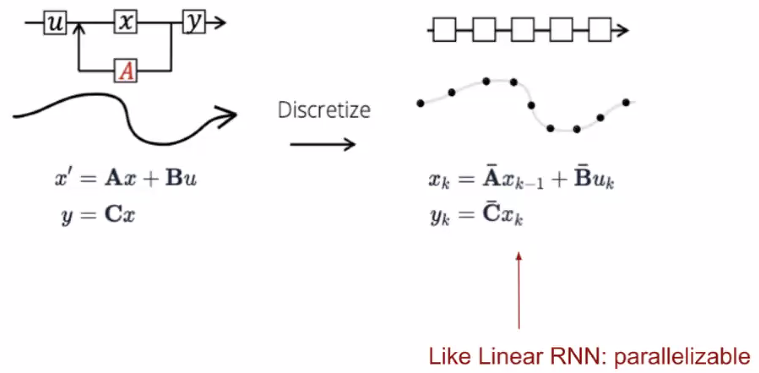

- SSM

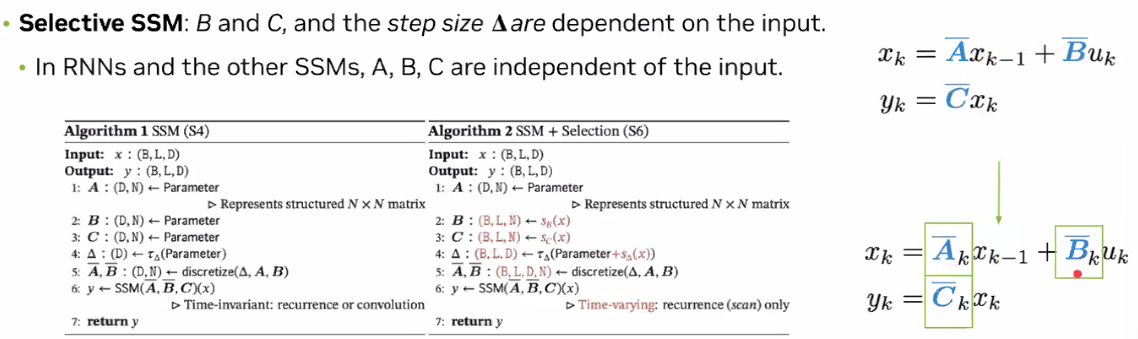

- Selective SSM:

the matrices B and C and the step size depend on the input

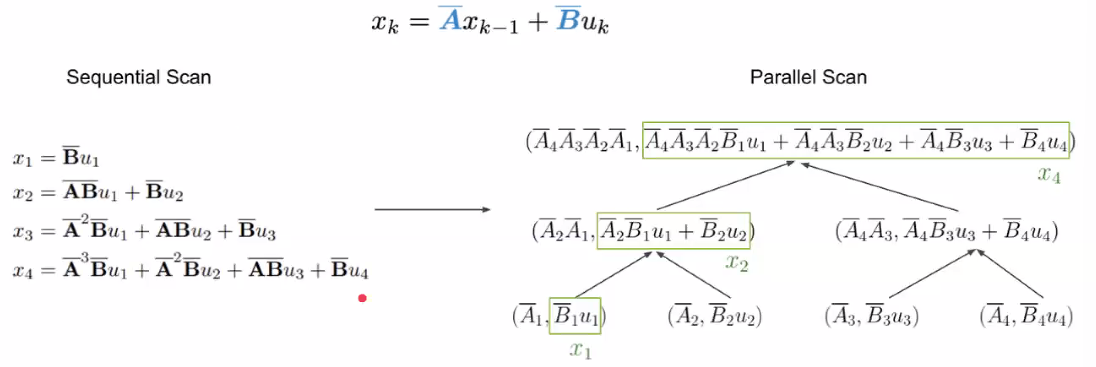

- Parallel scan:

Because the combine step of the recurrence is associative, the grouping order does not matter, so partial sequences can be computed independently and then combined iteratively.

- Hardware-aware implementation:

minimize data copies between GPU memory levels (HBM and SRAM)

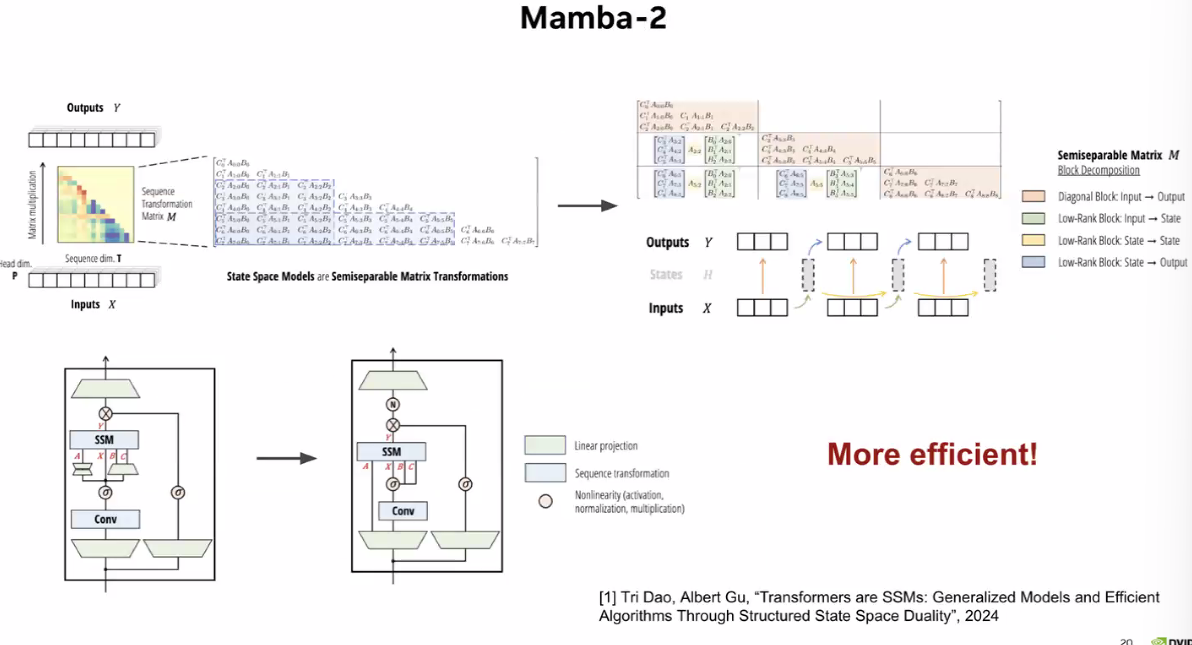
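The selective SSM and parallel scan ideas above can be sketched in a few lines of plain Python. This is a minimal scalar-state illustration, not the actual Mamba kernel: the helper names, the scalar weights `w_delta`, `w_b`, `w_c`, and the simplified discretization are all assumptions. It checks that a divide-and-conquer scan built on an associative operator reproduces the step-by-step recurrence.

```python
import math


def selective_params(xs, A=-1.0, w_delta=0.5, w_b=1.0, w_c=1.0):
    """Per-step coefficients (a_t, b_t * x_t, c_t), all depending on the input x_t."""
    coeffs = []
    for x in xs:
        delta = math.log1p(math.exp(w_delta * x))  # softplus keeps the step size positive
        a = math.exp(delta * A)                    # discretized decay, 0 < a < 1 for A < 0
        bx = delta * (w_b * x) * x                 # input-dependent B_t times x_t
        c = w_c * x                                # input-dependent C_t
        coeffs.append((a, bx, c))
    return coeffs


def sequential_scan(coeffs):
    """Plain recurrence: h_t = a_t * h_{t-1} + b_t * x_t, y_t = c_t * h_t."""
    h, ys = 0.0, []
    for a, bx, c in coeffs:
        h = a * h + bx
        ys.append(c * h)
    return ys


def combine(left, right):
    """Associative operator: compose the map of `left` followed by `right`."""
    a1, b1 = left
    a2, b2 = right
    return (a2 * a1, a2 * b1 + b2)


def prefix_scan(pairs):
    """Divide-and-conquer inclusive scan; the two halves do not depend on each other."""
    if len(pairs) <= 1:
        return list(pairs)
    mid = len(pairs) // 2
    left, right = prefix_scan(pairs[:mid]), prefix_scan(pairs[mid:])
    carry = left[-1]
    return left + [combine(carry, r) for r in right]


def parallel_style_scan(coeffs):
    prefixes = prefix_scan([(a, bx) for a, bx, _ in coeffs])
    # With h_0 = 0, the hidden state h_t is the second component of each prefix.
    return [c * h for (_, h), (_, _, c) in zip(prefixes, coeffs)]


xs = [0.5, -1.0, 2.0, 0.3, 1.5]
coeffs = selective_params(xs)
seq, par = sequential_scan(coeffs), parallel_style_scan(coeffs)
print(max(abs(s - p) for s, p in zip(seq, par)))  # should be at rounding level
```

The recursion here still runs on one thread; the point is only that the two halves of `prefix_scan` could be evaluated in parallel because `combine` is associative.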

Mamba-2

- In Mamba, the main bottleneck was the parallel scan.

Mamba-2 tries to resolve this through architectural improvements such as dividing the input into chunks.

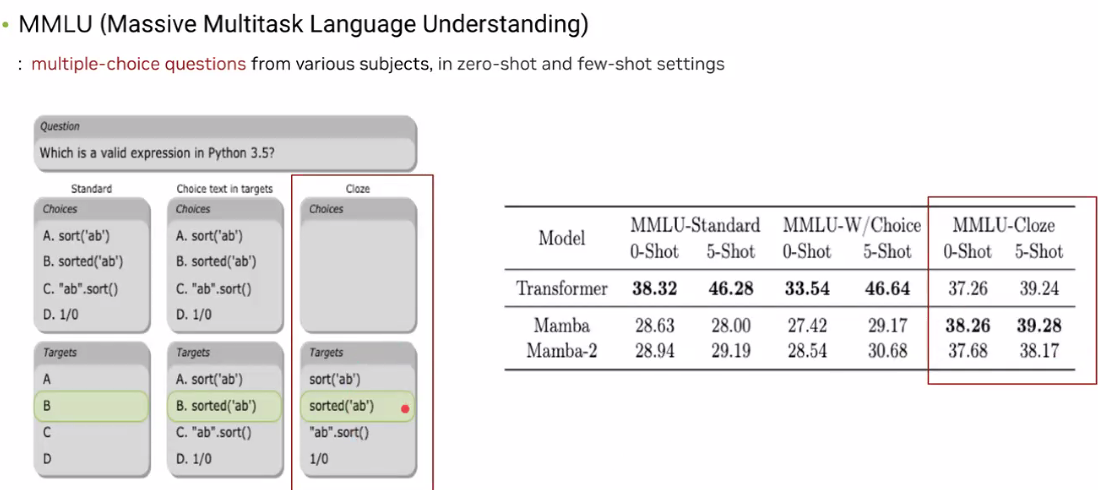

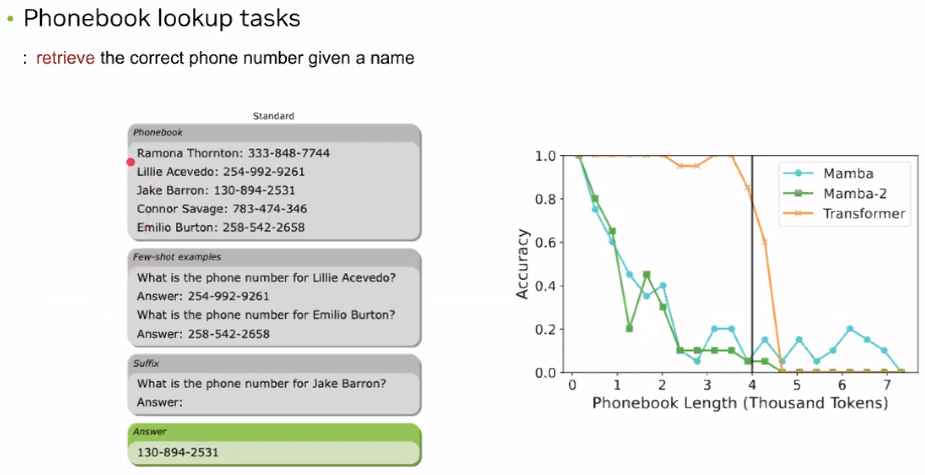
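The chunking idea can be illustrated with a simplified scalar sketch. It is an assumption-laden toy, not the actual Mamba-2 algorithm: inside each chunk the outputs come from a masked, attention-like sum, and only a single state value is carried from one chunk to the next. The function names and the per-step inputs `a`, `bx`, `c` are hypothetical.

```python
def sequential(a, bx, c):
    """Reference recurrence: h_t = a_t * h_{t-1} + bx_t, y_t = c_t * h_t."""
    h, ys = 0.0, []
    for a_t, bx_t, c_t in zip(a, bx, c):
        h = a_t * h + bx_t
        ys.append(c_t * h)
    return ys


def chunked(a, bx, c, chunk_size=4):
    """Same outputs, computed chunk by chunk with one carried state per chunk."""
    ys, h_in = [], 0.0
    for start in range(0, len(a), chunk_size):
        ac, bc, cc = (v[start:start + chunk_size] for v in (a, bx, c))
        n = len(ac)
        # decay[i][j]: product of a over steps j+1..i, i.e. how much of
        # the input at step j survives until step i within this chunk.
        decay = [[0.0] * n for _ in range(n)]
        for i in range(n):
            decay[i][i] = 1.0
            for j in range(i - 1, -1, -1):
                decay[i][j] = decay[i][j + 1] * ac[j + 1]
        for i in range(n):
            intra = sum(decay[i][j] * bc[j] for j in range(i + 1))  # within-chunk part
            inter = decay[i][0] * ac[0] * h_in                      # decayed incoming state
            h_i = intra + inter
            ys.append(cc[i] * h_i)
        h_in = h_i  # state handed to the next chunk
    return ys


a = [0.9, 0.5, 0.8, 0.7, 0.6, 0.95, 0.4, 0.3, 0.85, 0.75]
bx = [1.0, -0.5, 0.25, 2.0, 0.0, 1.5, -1.0, 0.75, 0.1, 0.3]
c = [0.2, 1.0, -0.3, 0.5, 0.9, 1.1, 0.4, -0.6, 0.8, 1.0]
print(max(abs(u - v) for u, v in zip(sequential(a, bx, c), chunked(a, bx, c))))
```

The within-chunk part is a dense, matrix-multiplication-shaped computation, while the sequential dependency is reduced to one state hand-off per chunk.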

Limitations of Mamba

- Poor at MMLU and the Phonebook task

Mamba does poorly on tasks that require the following:

- in-context learning

- information routing between tokens

- copying from the context (weak on long-context tasks)
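To give a feel for what a copying task such as Phonebook demands, here is a small sketch that builds a synthetic lookup prompt. The prompt format, the function names, and the exact-match scoring are hypothetical and are not the setup used in the paper; the point is that the answer must be copied verbatim from somewhere in the context.

```python
import random


def make_phonebook_prompt(n_entries, seed=0):
    """Build a synthetic phonebook and a question about one of its entries."""
    rng = random.Random(seed)
    names = [f"Person{idx:04d}" for idx in range(n_entries)]
    numbers = [str(rng.randrange(10**9, 10**10)) for _ in names]  # 10-digit numbers
    book = dict(zip(names, numbers))
    target = rng.choice(names)
    lines = [f"{name}: {number}" for name, number in book.items()]
    prompt = "\n".join(lines) + f"\nWhat is the phone number of {target}?\nAnswer:"
    return prompt, book[target]


def exact_match(model_output, expected):
    """Score 1 only if the number was reproduced exactly."""
    return model_output.strip() == expected


prompt, answer = make_phonebook_prompt(n_entries=50)
print(prompt[-80:])
print("expected:", answer)
```

A model with a fixed-size state has to have kept the right entry in compressed form before it sees the question, whereas attention can look the entry up in the cached context.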

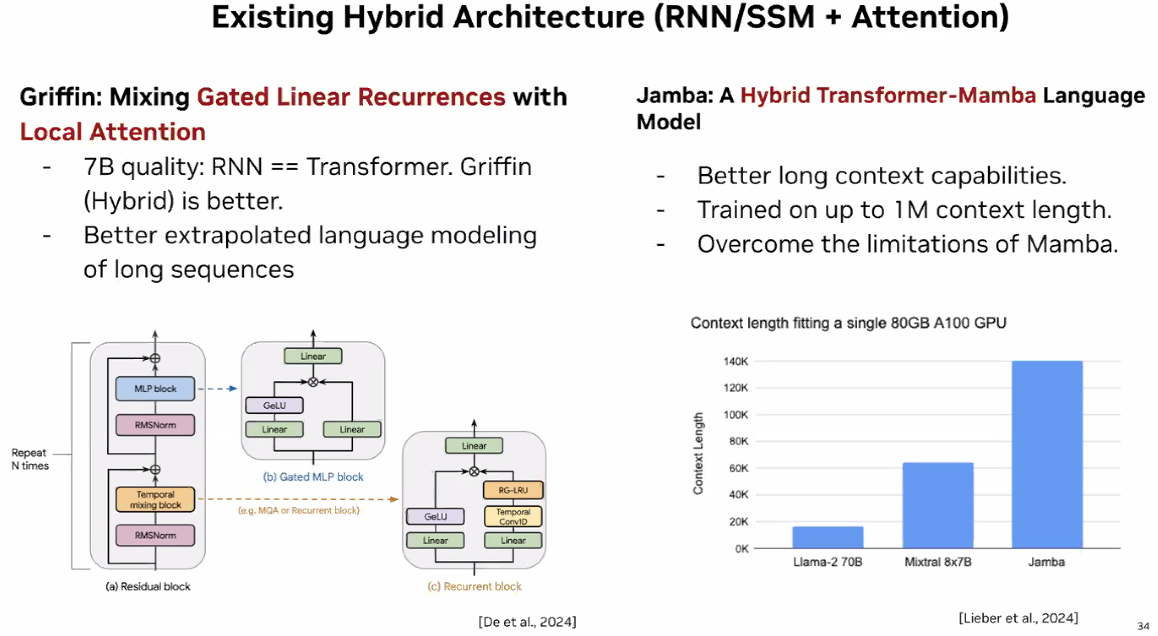

Hybrid Architecture of Mamba and Transformer

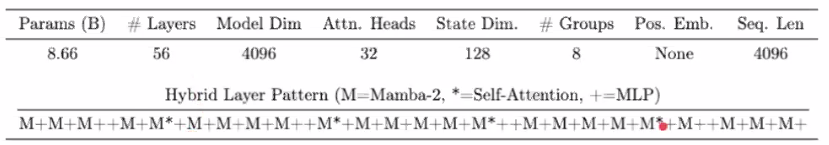

- Our Hybrid Mamba-Transformer Model

  - Minimize the number of attention layers and maximize the number of MLPs

  - Does not necessarily need Rotary Position Embedding (RoPE)

  - Spread the attention and MLP layers evenly

  - Place a Mamba layer at the beginning, so no position embedding is needed

  - Grouped-Query Attention (GQA) makes it more efficient

  - Global attention gives better performance

- Mamba-2 Hybrid

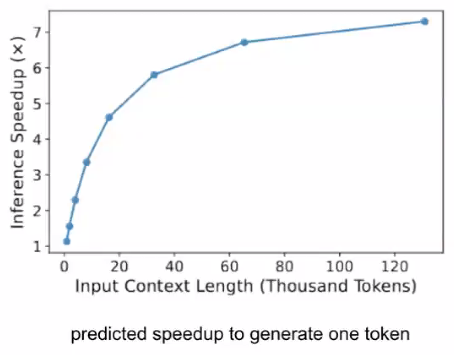

Inference is fast.

The states in Mamba can now capture a longer history.

The attention layers are the bottleneck of the hybrid model, so the speedup grows more slowly as the context length increases.
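The layer-placement rules of the hybrid model (few attention layers, evenly spread attention and MLP layers, a Mamba layer first) can be sketched as a small pattern generator. The helper names and the layer counts in the usage line are illustrative assumptions; the notes above do not specify exact counts or the exact ordering.

```python
def spread(base, item, count):
    """Insert `count` copies of `item` into `base` at roughly even intervals."""
    total = len(base) + count
    # Integer slot positions in the final list; slot 0 is never chosen
    # when `base` is non-empty, so the first layer always comes from `base`.
    slots = {(k + 1) * total // (count + 1) for k in range(count)}
    rest = iter(base)
    return [item if i in slots else next(rest) for i in range(total)]


def hybrid_layer_pattern(n_mamba, n_attention, n_mlp):
    """Return a list of layer names: 'mamba', 'attention', or 'mlp'."""
    mixers = spread(["mamba"] * n_mamba, "attention", n_attention)
    return spread(mixers, "mlp", n_mlp)


pattern = hybrid_layer_pattern(n_mamba=24, n_attention=4, n_mlp=28)
print(pattern[:12])
print({name: pattern.count(name) for name in ("mamba", "attention", "mlp")})
```

Because only the attention layers keep a key-value cache, keeping their count small limits how much of the model's cost grows with context length.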

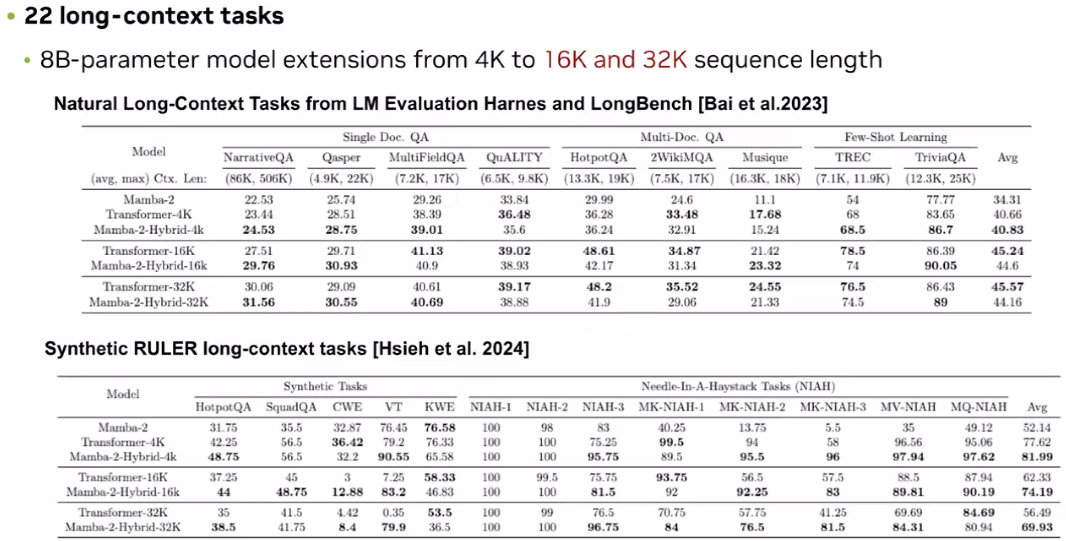

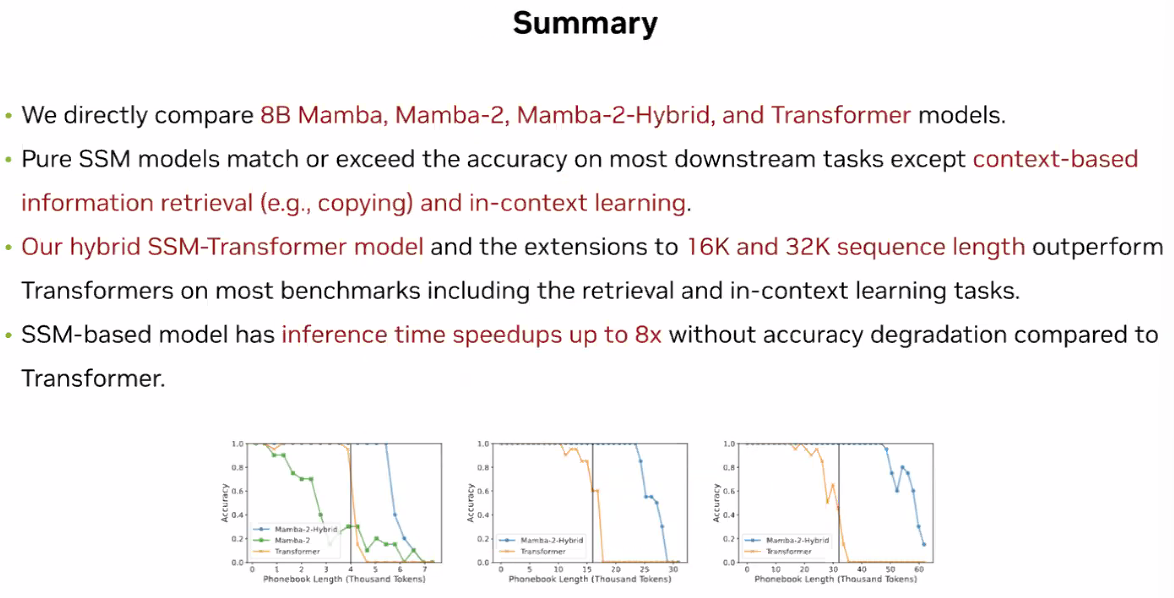

Summary

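From left to right: 4K, 16K, and 32K-based models

Unlike the Transformer, the Mamba-2 Hybrid largely avoids quadratic computation and is fast at inference.

However, open issues remain, such as the attention layers being the bottleneck, so there is still room for further development.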