3DDST

Generating Images with 3D Annotations Using Diffusion Models (ICLR 2024 Spotlight)

3DDST - Generating Images with 3D Annotations Using Diffusion Models (ICLR 2024 Spotlight)

Wufei Ma, Qihao Liu, Jiahao Wang, Angtian Wang, Xiaoding Yuan, Yi Zhang, Zihao Xiao, Guofeng Zhang, Beijia Lu, Ruxiao Duan, Yongrui Qi, Adam Kortylewski, Yaoyao Liu, Alan Yuille

paper :

https://arxiv.org/abs/2306.08103

project website :

https://ccvl.jhu.edu/3D-DST/

핵심 :

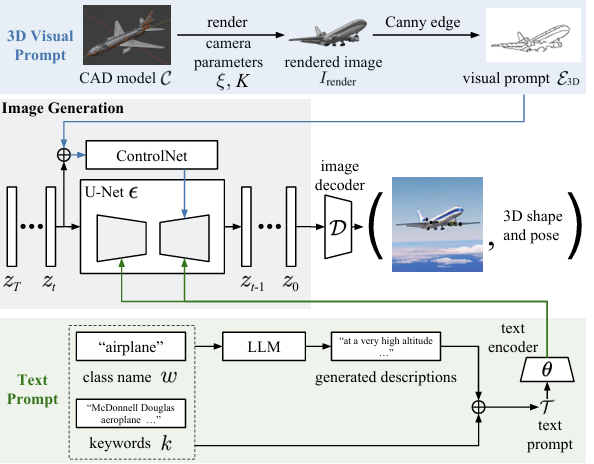

3D structure(shape) 정보를 담고 있는, CAD model로부터 render한 image의 edge map을

ControlNet의 visual prompts (3D geometry control)로 넣어줌으로써

Diffusion model이 특정 3D structure를 가진 image를 generate할 수 있게 함!

즉, Diffusion model generates new images where its 3D geometry can be explicitly controlled

결과적으로 Diffusion model로 data generation할 때 we can conveniently acquire GT 3D annotations for the generated 2D images

Background

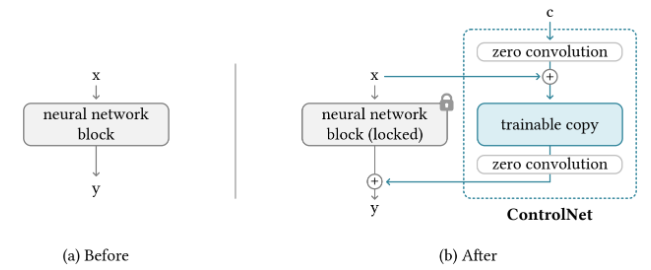

- ControlNet :

- 대규모 Diffusion model (e.g. Stable Diffusion)의 weight를 trainable copy와 locked copy로 복제한 뒤

locked copy는 수많은 internet-scale images로 학습한 network 능력을 보존하고

trainable copy는 specific task별 dataset으로 학습하여 control을 학습- 원래 weight를 freeze하고 이를 copy해서 사본 weight를 학습하는 이유는

dataset (for specific task)이 작을 때의 overfitting을 방지하고 internet-scale로 학습한 대형 Diffusion model의 품질을 보존 - zero-convolution : 처음에 weight, bias가 0으로 초기화되고, 점점 학습

- optimization 완전 처음에는 zero-convolution output이 0이라서 ControlNet이 없는 것처럼 작동하였다가

점점 zero-convolution의 weight, bias가 학습되면서 ControlNet이 쓰임

- optimization 완전 처음에는 zero-convolution output이 0이라서 ControlNet이 없는 것처럼 작동하였다가

-

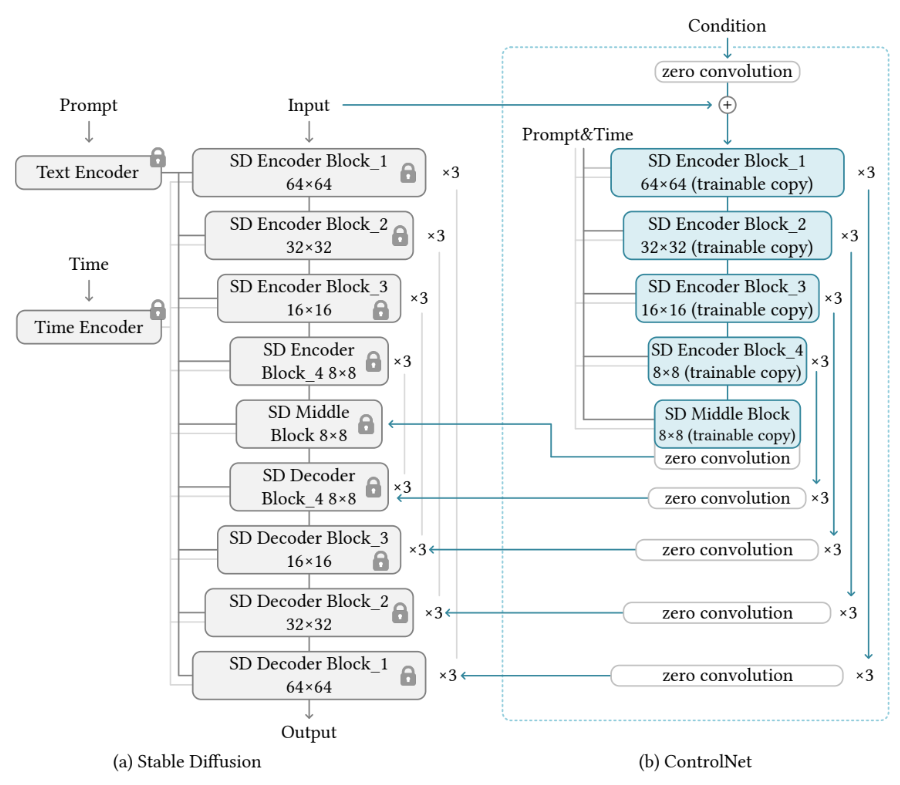

ControlNet은 원래 Diffusion model의encoder의 trainable copy로 구성되어 있고,

input과control을ControlNet에 입력으로 넣어서 나온ControlNet의 출력을

원래 Diffusion model의decoder에 넣어줌

- 원래 weight를 freeze하고 이를 copy해서 사본 weight를 학습하는 이유는

- 대규모 Diffusion model (e.g. Stable Diffusion)의 weight를 trainable copy와 locked copy로 복제한 뒤

- ControlNet for Stable Diffusion :

- Component :

- Text Encoder : OpenAI CLIP

- Time (diffusion time-step) Encoder : Positional Encoding

- Stable Diffusion transforms an 512x512 image into a 64x64 latent image,

so use small network to also transform an image control \(c_{i}\) into a 64x64 feature map

- Role :

- ControlNet controls each level of U-Net of Stable Diffusion

- Efficient since

원래 모델은 잠겨 있기 때문에 원래 모델의 encoder에 대한 기울기 계산은 필요하지 않아서

원래 모델에서 기울기 계산의 절반을 피할 수 있으므로

학습 속도 빨라지고 GPU 메모리도 절약 가능

- Component :

Method

Overview

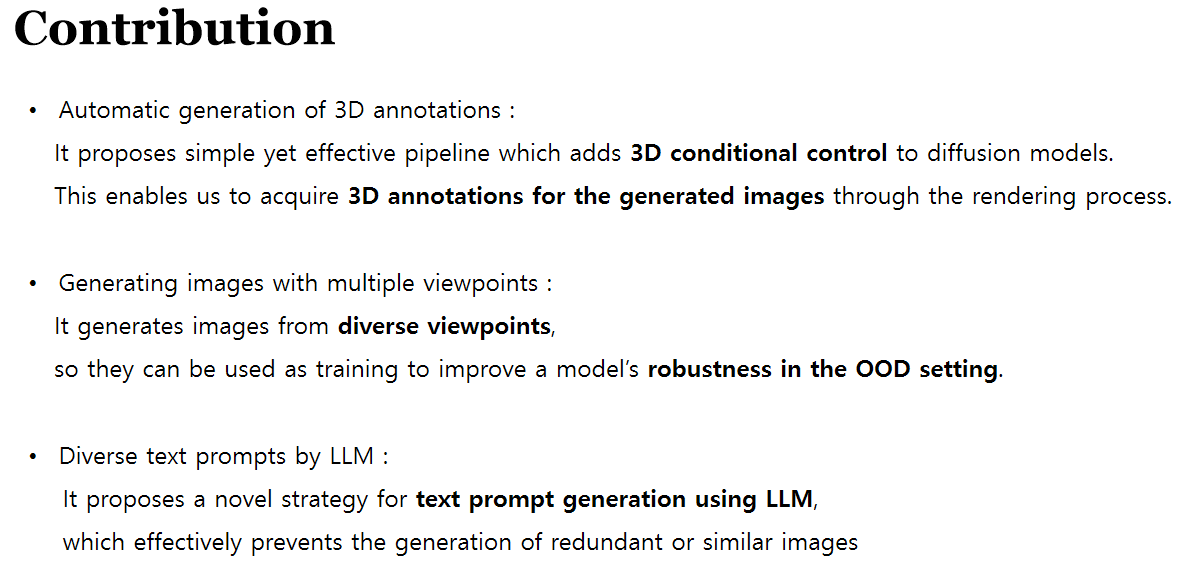

- Contribution :

- 기존 diffusion models의 한계 :

- generated image의 3D geometry를 control할 수 없음.

하려 해도 3D pose key points 등 3D annotations 필요 - 주로 simple text prompts에만 의존

- robust model로 훈련시키기 위해 OOD scenarios가 필요한데,

OOD scenarios를 위한 images를 생성하기 어렵.

- generated image의 3D geometry를 control할 수 없음.

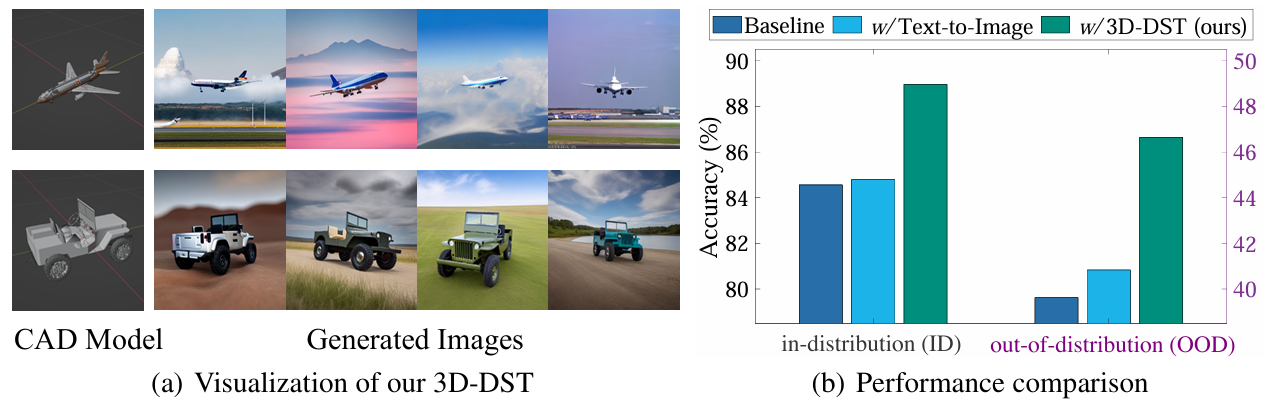

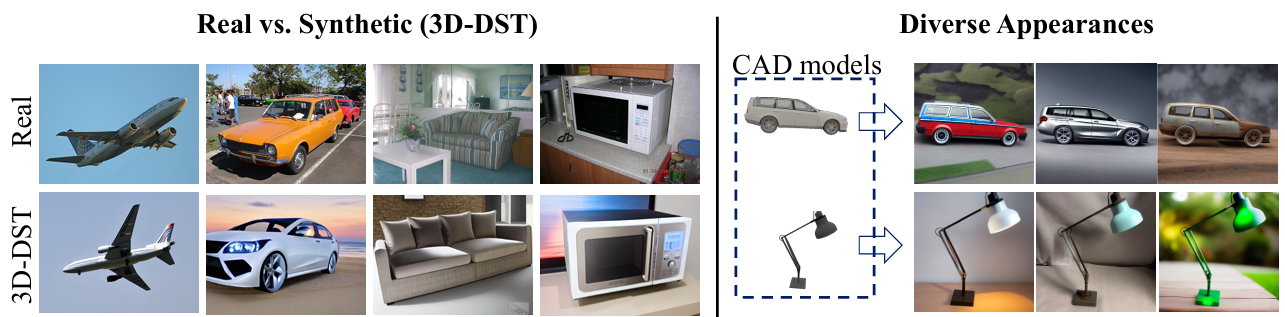

- 본 논문 (3D-DST) :

graphic-based rendering을 통해 3D shape 정보를 가진 3D visual prompts를 만든 뒤 ControlNet에 3D geometry control로 넣어줌으로써

Diffusion model이 specific 3D structure를 가진 images를 generate할 수 있음

즉, shape-aware training process enables Diffusion model to generate new images where its 3D geometry can be explicitly controlled

결과적으로 Diffusion model로 data generation할 때 we can conveniently acquire GT 3D annotations for the generated 2D images - ShapeNet, Pix3D 등 다양한 3D object dataset으로 evaluate함으로써

이전 diffusion model에 비해 본 논문의 3D-aware diffusion model이 generated images’ 3D shape control을 얼마나 잘 하는지 보여줌

(3D shape similarity 등의 metrics로 평가)- 여러 vision tasks (e.g. classification, 3D pose estimation)에서, 그리고 ID and OOD setting 모두에서,

3D-DST data로 pre-train한 뒤 specific vision task에 적용하면 generated images는 높은 performance 보임 - useful for applications like 3D modeling, product design, and VR where the precise 3D object shape is important

- 여러 vision tasks (e.g. classification, 3D pose estimation)에서, 그리고 ID and OOD setting 모두에서,

- 기존 diffusion models의 한계 :

Prompt Generation

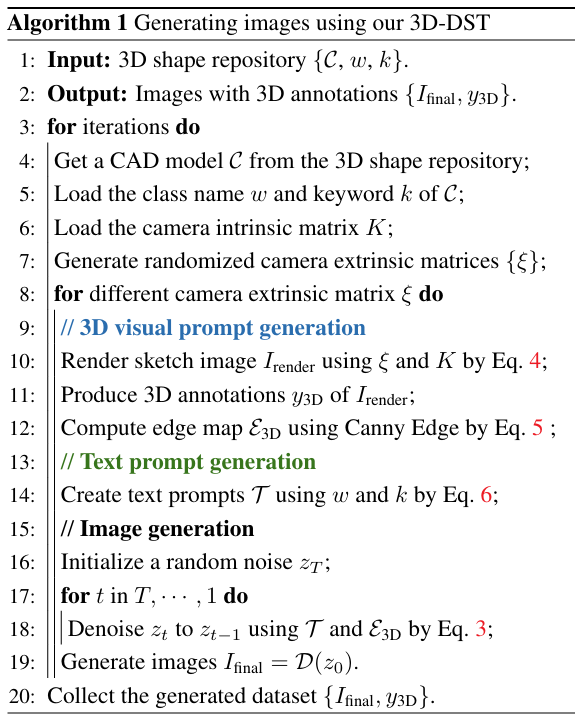

-

3D Visual PromptGeneration :

3D shape repository(e.g. ShapeNet, Objaverse, OmniObject3D)로부터 3D CAD model을 얻은 뒤

3D CAD model로부터 render한 image의edge map을 visual prompt (3D geometry control)로 사용- rendered image by 3D CAD model from ShapeNet, Objaverse, OmniObject3D

\(\rightarrow\) edge map by Canny edge filter

\(\rightarrow\) 3D voxel grid representation by MLP

\(\rightarrow\) combine 3D structure info. from 3D voxel grid features and 2D appearance info. from 2D image features

- rendered image by 3D CAD model from ShapeNet, Objaverse, OmniObject3D

-

Text PromptGeneration :

not only produce images with higher realsim and diversity

but also improve OOD robustness of models pre-trainded on our 3DDST data- initial text prompt : class name \(w\) + keyword of CAD model \(k\)

- text prompt : improve the diversity and richness by LLM(LLaMA)

Result

- Result :

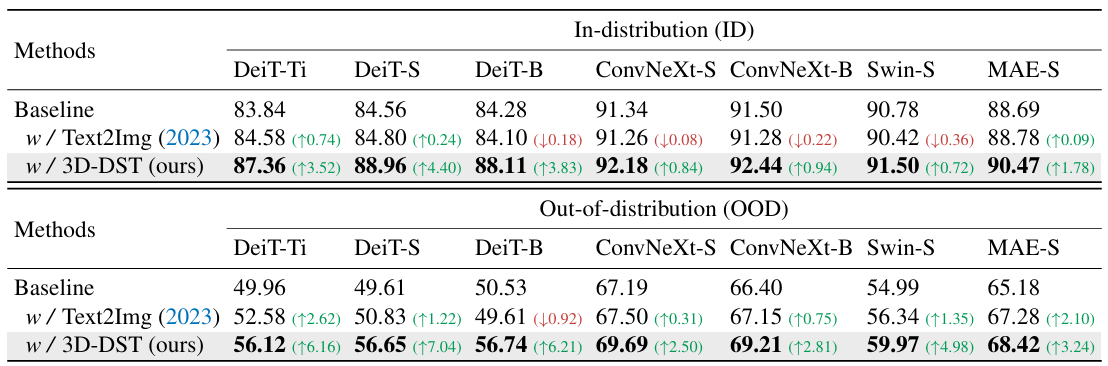

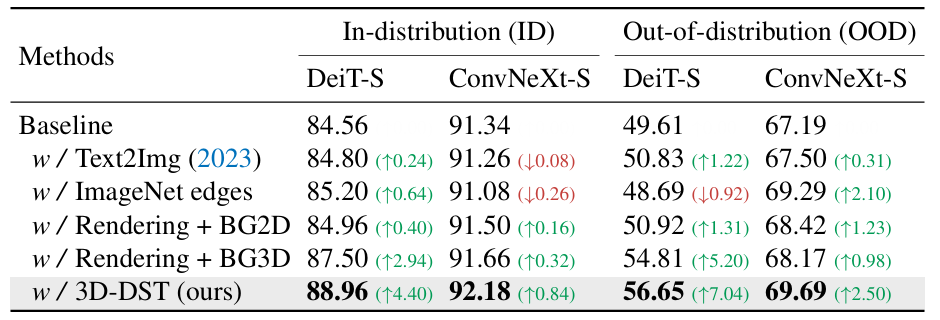

- ID and OOD Image Classification :

- Dataset :

ImageNet-100 (ID), ImageNet-200 (ID), ImageNet-R (OOD) - 3D-DST Data Generation :

각 object class에 대해 ShapeNet과 Objaverse로부터 약 30개의 CAD models를 collect하여

각 object class에 대해 2,500개의 images를 generate - Notation :

- Baseline: pre-train 없이 train on target dataset

- Text2Img: 3D control 없이 Text2Img data로 pre-train한 뒤 fine-tune on target dataset

- 3D-DST : 3D control 있는 3D-DST data로 pre-train한 뒤 fine-tune on target dataset

- LLM : LLM prompt 사용

- Dataset :

- ID and OOD Image Classification :

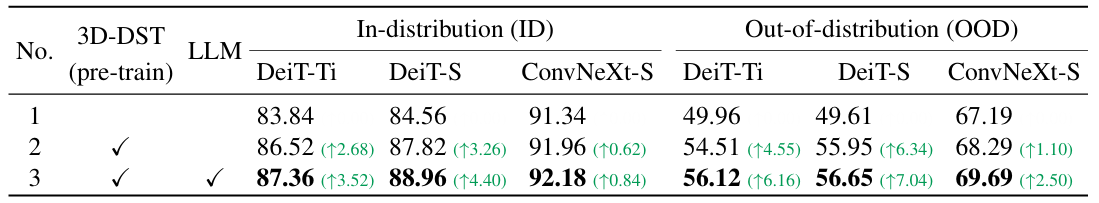

- Ablation Study :

- Data Generation methods on Image Classification :

- Notation :

- ImageNet edges : Diffusion-based로 Data Generate하는 데 ImageNet으로부터 edges 얻어서 condition으로 사용

- Rendering + BG2D : CAD model로부터 rendering해서 Data Generate하는 데 random 2D image bg 사용

- Rendering + BG3D : CAD model로부터 rendering해서 Data Generate하는 데 random 3D environment bg 사용

- Notation :

- Data Generation methods on Image Classification :

- Result :

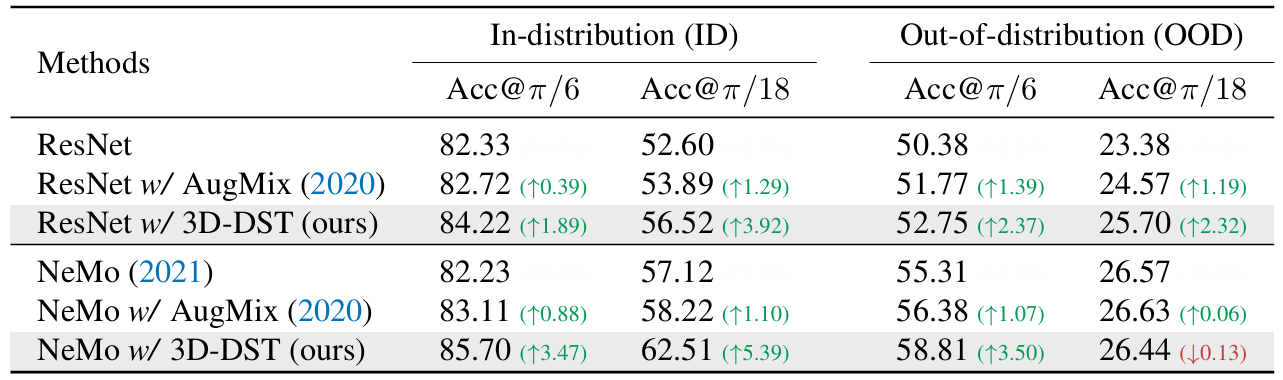

- Robust Category-level 3D Pose Estimation :

- Dataset :

PASCAL3D+, ObjectNet3D, OOD-CV - Notation :

- ResNet : ResNet extended with a pose classification head

- NeMo : SOTA 3D pose estimation model

- 3D-DST : pre-train the model on 3D-DST data

- AugMix : pre-train the model with strong data augmentation (ICLR 2020)

- Dataset :

- Robust Category-level 3D Pose Estimation :

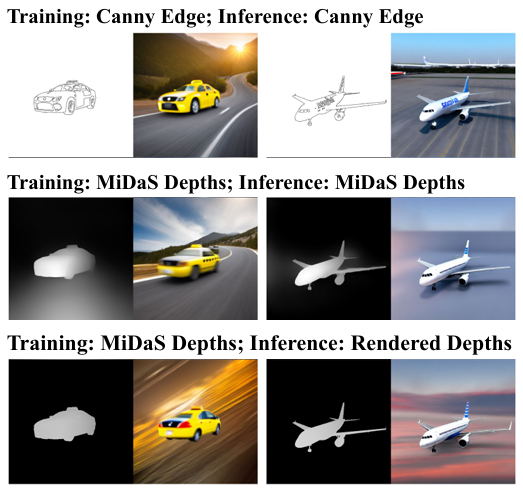

- Ablation Study :

- Types of 3D controls :

edge maps / MiDaS-predicted depths / Blender-rendered depths

(use the same 3D model and text prompts)- result : edge maps 쓰는 게 realism 및 fg/bg clarity 측면에서 가장 좋음

- Types of 3D controls :

- Data Release :

- Aligned CAD models from 1000 classes in ImageNet-1k Link :

ImageNet-1k의 각 class에 대해

ShapeNet, Objaverse, OmniObject3D로부터 3D CAD model을 얻은 뒤

CAD model의 canonical pose를 align - LLM-generated captions for 1000 classes in ImageNet-1k Link

- 3D-DST data for 1000 classes in ImageNet-1k Link :

위의 3D Visual Prompt, Text Prompt를 이용하여 generate한 3D-DST image

- Aligned CAD models from 1000 classes in ImageNet-1k Link :

Limitation

- Failure Case :

- 한계 :

image with challenging and uncommon viewpoints

e.g. car from below, guitar from side - 대응 :

K-fold consistency filter (KCF)를 적용하여

ensemble model의 predictions를 기반으로

good images를 일부 제거하더라도 failed images를 자동으로 제거함으로써

good images의 비율을 높임- KCF는 여전히 limited..

diffusion-generated dataset에서 failed samples를 감지하고 제거하는 건 여전히 challenging problem

- KCF는 여전히 limited..

- 한계 :

- Limitation :

- increased computational cost, complexity, training time, model size

by additional 3D shape encoding- can be a barrier to real-world deployment

- evaluation is focused on 비교적 간단한 3D object datasets

- need to be generalized to more complex, real-world 3D scenes and geometires

- 3D shape control이 다른 desired attributes(photorealism, semantic consistency, coherence 등)와 얼마나 잘 합쳐지는지 balancing하는 건

앞으로 diffusion model architectures 및 training procedures 개선과 함께 고려되어야 함

- increased computational cost, complexity, training time, model size